9 Incredible Movies About Florida You Need To See

You can learn a lot about the culture and history of a place by the art it inspires. Florida is often depicted as a sunny place for shady people, but there are plenty of films that paint it in a more positive light as well. In any case, you can’t deny that our state has some fascinating stories to tell.

The Florida Project is an independent film that came out in 2017 and was met with enthusiastic praise from critics. It follows the struggles of a young girl and her mother living in a motel in Kissimmee.

Key Largo stars Old Hollywood's golden couple, Humphrey Bogart and Lauren Bacall. This classic film noir features everything from gangsters to hurricanes. Key Largo's still-standing Caribbean Club makes an appearance in the film (though it was mostly filmed outside of Florida).


Scarface tells the story of drug kingpin Tony Montana's rise in Miami. Starring Al Pacino and Michelle Pfeiffer, it became a pop culture classic.


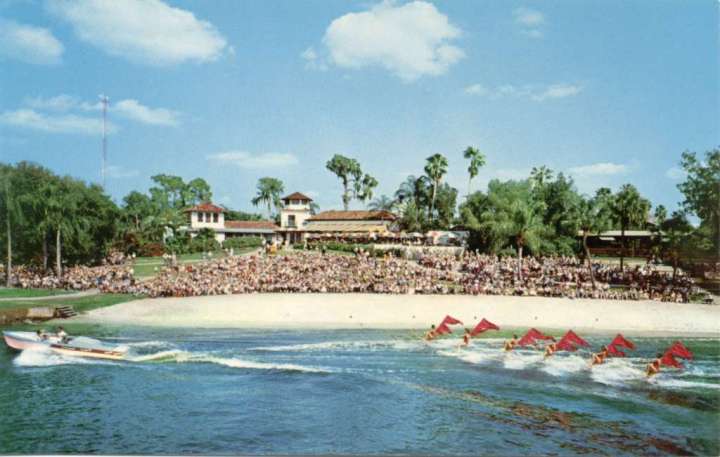

Cypress Gardens was one of Florida's first big tourist attractions, featuring gorgeous, elaborate gardens and water-ski shows. Easy to Love is a romance set there, starring Esther Williams as a water-ski performer.

Cocoon is a fascinating science-fiction film about a group of retirement home residents who stumble onto a fountain of youth. It was filmed at several locations in and around St. Petersburg.

Magic Mike stars Channing Tatum and Matthew McConaughey and is partly based on Tatum's experience working in Tampa area strip clubs. Because of this, you will spot several locations around Tampa in the film.

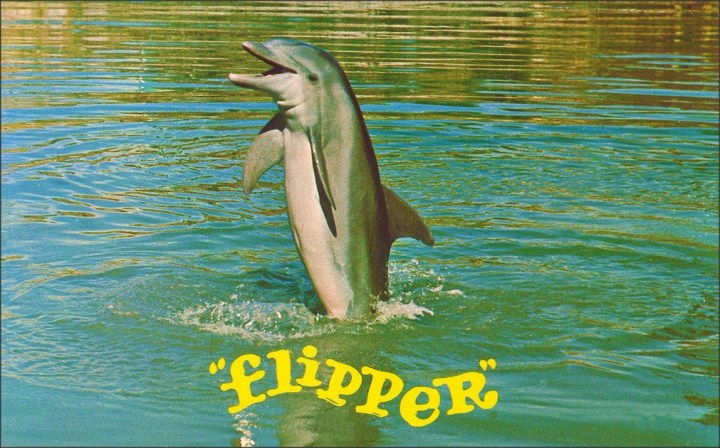

Floridians are famous for being semi-aquatic creatures themselves, and lots of movies have been filmed in and around our waters. Both the original Flipper and its remake follow the adventures of a young boy who lives in Florida and befriends a dolphin.

Monster is a fascinating biographical crime film about female serial killer Aileen Wuornos, who killed seven men while working as a prostitute. She was eventually arrested at a dive bar in Port Orange and sentenced to death for six of the murders.


Where the Boys Are is a teen dramedy about four young women on spring break in Fort Lauderdale. It was the film debut of Connie Francis, who also sang the title song.

How many of these Florida films have you seen? What’s your favorite movie about the Sunshine State?

OnlyInYourState may earn compensation through affiliate links in this article. As an Amazon Associate, we earn from qualifying purchases.